Artificial Intelligence (AI)-aided Disease Prediction

1Academy of Medical Engineering and Translational Medicine, Tianjin University, 300072, Tianjin, China

2Tianjin Key Laboratory of Brain Science and Neural Engineering, Tianjin University, 300072, Tianjin, China

*Correspondence to: Zhe Liu, E-mail: zheliu@tju.edu.cn

Received: June 9 2020; Revised: August 5 2020; Accepted: September 24 2020; Published Online: October 28 2020

Cite this paper:

Chenxi Liu, Dian Jiao and Zhe Liu. Artificial Intelligence (AI)-aided Disease Prediction. BIO Integration 2020; 1(3): 130–136.

DOI: 10.15212/bioi-2020-0017. Available at: https://bio-integration.org/


© 2020 The Authors. This is an open access article distributed under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0/). See https://bio-integration.org/copyright-and-permissions/

Abstract

Artificial intelligence (AI) has been widely used in clinical medicine, and it is driving increasing innovation in AI-aided image analysis, AI-aided lesion determination, and AI-assisted healthcare management, among other fields. This review article focuses on emerging applications of AI in medicine and of AI-assisted visualized medicine, including novel diagnostic approaches, metadata analytical methods, and versatile AI-aided treatment applications in preclinical and clinical use. It also looks at future perspectives on AI-aided disease prediction.

Keywords

Artificial intelligence, clinical medicine, deep learning, disease prediction, visualized medicine.

Artificial intelligence

Artificial intelligence (AI) is a technology that simulates and extends human intelligence in machines programmed to mimic human actions [1, 2]. AI mainly includes machine learning (ML), robotics, image recognition, language recognition, neural networks (NNs), natural language processing, and expert systems [3]. The basic research topics of AI include knowledge representation, machine perception, machine thinking, machine behavior, and ML, of which ML is the core. ML studies how to give a computer a learning ability similar to that of a person, so that it can acquire knowledge automatically through learning [4].

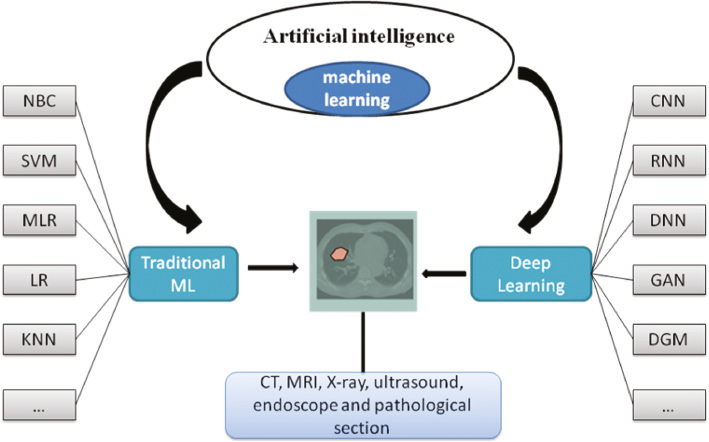

Common ML algorithms currently include decision trees [5], the naive Bayesian classifier (NBC) [6], the support vector machine (SVM) [7], random forests, multiple linear regression (MLR), the artificial neural network (ANN), boosting and bagging algorithms, logistic regression (LR), and k-nearest neighbors (KNN). Figure 1 shows the domains of AI, ML, and medicine and the relationships among them. The learning mechanism of ML simulates the human ability to acquire knowledge and skills. An ANN is an information processing tool composed of multiple perceptrons connected in a particular way [8, 9]. Like neurons in the human brain, its artificial neurons are stacked and interconnected to form an NN. Input information is transmitted from neuron to neuron, and the processed result is output at the end of the network; this process constitutes the learning and cognition of the input data by the entire NN [10]. Learning styles fall into three categories: 1) supervised learning (learning with a tutor), in which tutor signals (labels) are included in the input data; 2) unsupervised learning, in which the input data contain no tutor signal, with typical forms including discovery learning, clustering, and competitive learning; and 3) reinforcement learning, a learning method guided by statistical and dynamic programming techniques that uses environmental feedback (reward or punishment signals) as input.
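To make the perceptron and the supervised ("tutor signal") setting concrete, the following is a minimal sketch in plain Python of the classic perceptron learning rule. The toy dataset (logical AND), the learning rate, and the function names are illustrative assumptions, not taken from the cited works.

```python
from typing import List, Tuple

def step(z: float) -> int:
    """Threshold activation: the neuron fires (1) if its weighted input is non-negative."""
    return 1 if z >= 0 else 0

def predict(weights: List[float], bias: float, x: List[float]) -> int:
    """Forward pass of one perceptron: weighted sum of inputs plus bias, then threshold."""
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train_perceptron(data: List[Tuple[List[float], int]], lr: float = 1.0, epochs: int = 20):
    """Supervised learning: every example carries a tutor signal, the label y."""
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            error = y - predict(weights, bias, x)  # tutor signal minus current output
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

# Toy labeled data (logical AND), purely illustrative.
and_data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(and_data)
print([predict(w, b, x) for x, _ in and_data])  # [0, 0, 0, 1]
```

Each misclassification nudges the weights toward the label supplied by the tutor; on linearly separable data such as this, the rule converges to a separating boundary.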

Figure 1 The domain and relationship of AI, ML and medicine.

Deep learning is a newer family of learning algorithms that trains perceptrons with multiple hidden layers on massive data, extracting useful features and obtaining functions that can effectively represent the training data [11]. Deep networks generally contain more than five hidden layers; each layer represents the samples in a different feature space, bringing them progressively closer to the objective function, which provides the two basic elements of deep learning: depth and layer-by-layer training. Using optimization algorithms, NNs can learn from massive data and build increasingly abstract representations of their own [12]. Deep learning mainly includes convolutional neural networks (CNNs), recursive neural networks (RvNNs), recurrent neural networks (RNNs), deep neural networks (DNNs), generative adversarial networks (GANs), and deep generative models (DGMs) [13].
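The idea of stacked hidden layers, each re-representing the input in a new feature space, can be sketched as a forward pass through a small fully connected network. This is a toy illustration under stated assumptions: the weights are hypothetical and set by hand, whereas in practice they would be learned by an optimization algorithm such as gradient descent with backpropagation, and real deep networks have many more and wider layers.

```python
import math
from typing import List, Tuple

Vector = List[float]
Layer = Tuple[List[Vector], Vector]  # (weight matrix with one row per neuron, bias vector)

def dense(x: Vector, W: List[Vector], b: Vector) -> Vector:
    """Fully connected layer: each neuron outputs a weighted sum of all inputs plus its bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]

def forward(x: Vector, layers: List[Layer]) -> float:
    """Pass x through the hidden layers (ReLU) and a single sigmoid output neuron."""
    h = x
    for W, b in layers[:-1]:
        h = relu(dense(h, W, b))  # each hidden layer maps h into a new feature space
    W_out, b_out = layers[-1]
    z = dense(h, W_out, b_out)[0]
    return 1.0 / (1.0 + math.exp(-z))  # probability-like score

# Hypothetical hand-set weights: 2 inputs -> 2 hidden -> 2 hidden -> 1 output.
layers: List[Layer] = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0], [-1.0, 1.0]], [0.0, -0.5]),
    ([[1.0, -2.0]], [0.0]),
]
x = [2.0, 1.0]
score = forward(x, layers)
print(round(score, 3))  # 0.924
```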

AI participates in clinical medicine

AI technology can perform a wide range of functions, such as assisting in diagnosis and precise treatment, predicting risks, and locating lesions, which can help reduce medical errors and improve productivity [14]. In recent years, breakthroughs in key AI-related technologies such as image recognition, deep learning, and NN algorithms have driven revolutionary developments, and AI has been applied to disease screening, auxiliary diagnosis and treatment, case analysis, and other aspects of medicine and health care [15].

Doctors combine magnetic resonance imaging (MRI), computed tomography (CT), X-ray, and other visualized medical images with AI-assisted analysis to screen patients' conditions and clinical symptoms and to predict and diagnose disease [16, 17]. In clinical medicine, AI serves as a tool that assists doctors with disease-specific analysis when they read medical images, for example in ophthalmic diagnosis and treatment [18, 19].

To date, AI has mainly been used for image-based disease diagnosis in radiology and cardiology. In pathology, whole slide imaging (WSI) is widely used and is an important source of information. Its complexity is higher than that of many other imaging methods for several reasons: the images are very large (usually about 100k × 100k pixels); they carry color information from hematoxylin and eosin (H&E) staining and immunohistochemistry; unlike radiological images, they have no obvious anatomical orientation; information is available at multiple scales (e.g., ×4, ×20); and there may be multiple z-stack levels (each slice has a finite thickness, so different focal planes yield different images) [20].
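A quick back-of-the-envelope calculation shows why the size of whole slide images matters. The sketch below assumes, for illustration only, that the ~100k × 100k image is the ×20 scan, that tiles are 256 × 256 pixels, and that pixels are stored as uncompressed 8-bit RGB; none of these values come from the source, and the function names are hypothetical.

```python
import math
from typing import Tuple

def slide_dims_at(mag: float, base_mag: float, base_w: int, base_h: int) -> Tuple[int, int]:
    """Pixel dimensions of the slide when viewed at a lower magnification than it was scanned at."""
    return round(base_w * mag / base_mag), round(base_h * mag / base_mag)

def tiles_needed(width_px: int, height_px: int, tile_px: int = 256) -> int:
    """Non-overlapping square tiles needed to cover the slide (partial edge tiles count as whole)."""
    return math.ceil(width_px / tile_px) * math.ceil(height_px / tile_px)

BASE_W = BASE_H = 100_000  # ~100k x 100k pixels, as stated in the text
BASE_MAG = 20.0            # assumption: the full-resolution scan is the x20 level

for mag in (20.0, 4.0):
    w, h = slide_dims_at(mag, BASE_MAG, BASE_W, BASE_H)
    print(f"x{mag:g}: {w} x {h} px -> {tiles_needed(w, h)} tiles")

raw_bytes = BASE_W * BASE_H * 3  # assumption: uncompressed 8-bit RGB (3 bytes per pixel)
print(f"raw size at x20: {raw_bytes / 1e9:.0f} GB")
```

Under these assumptions a single slide yields roughly 150,000 tiles at ×20 and about 30 GB of raw pixel data before any z-stack levels are added, which is why WSI analysis is typically carried out patch by patch.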

AI has also been widely applied in ophthalmology, owing to its accuracy and cost considerations [21]. In the early years, AI was used to identify fundus hemorrhages and exudates, microaneurysms, and neovascularization in images from patients with diabetic retinopathy (DR). With the application of deep learning algorithms in ophthalmology, AI has achieved even more in DR screening, diagnosis, and classification. Ocular complications in diabetic patients have a low cure rate and are difficult to control, especially in the later stages of the disease. Therefore, a method that achieves high diagnostic accuracy and enables early diagnosis is of great significance for the effective prevention and treatment of DR. Researchers have iteratively optimized fundus image classification models and developed more mature DR-assisted diagnosis models. Ting et al. collected retinal images from people of different countries and ethnicities and developed a deep learning system that can detect images showing possible DR, glaucoma, and age-related macular degeneration (ARMD) [21].

Moreover, AI has become a powerful tool for transforming health care. Machine learning classification (MLC) has shown great potential in AI-assisted medical diagnosis. Liang et al. combined an automated natural language processing system with a deep learning algorithm to build a system that mimics a physician's reasoning during a face-to-face consultation, querying the electronic health record (EHR) and extracting clinical information from it [22].

AI technology can also play a vital role in other scenarios. On the one hand, although AI is unlikely to completely replace human health care providers, it can perform certain tasks with greater consistency, speed, and repeatability than humans. On the other hand, AI can work with cloud-based records, since patients' personal information is already stored in hospital databases.

Perhaps the most powerful role of AI will be to augment or supplement human providers. Studies have shown that AI and clinicians working together achieve better results than either working separately [23]. AI-based technology can also enhance real-time clinical decision support and thereby advance research in precision medicine.

AI can match or even surpass human performance in specific, well-defined tasks. Although training requires large datasets, deep learning has shown relative robustness to noise in real-world environments. The automation capability of AI has the potential to enhance clinicians' qualitative expertise. In tumor prediction, for example, this includes accurate volume measurement and delineation of tumor size over time, parallel tracking of multiple lesions, translation of subtle tumor phenotypes into genotypic meaning, and cross-referencing a single tumor against a database to predict the outcome of the case, potentially allowing a virtually unlimited number of comparisons [24]. Veta et al. showed that, in a tissue microarray (TMA) of male breast cancers, features such as nuclear shape and texture had prognostic value [25]. Lee et al. proposed features derived from tumor regions and the adjacent benign regions, and used H&E-stained images with ML tools to predict the likelihood of recurrence within 5 years after prostate surgery [26].
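Volume measurement from a segmentation, one of the automated tasks listed above, reduces to counting labeled voxels and multiplying by the voxel size. The minimal sketch below assumes a binary lesion mask stored as a set of voxel coordinates and a known voxel spacing in millimeters; the cubic lesions, the spacing, and the helper names are hypothetical values chosen so the arithmetic is easy to check.

```python
from typing import Dict, Set, Tuple

Voxel = Tuple[int, int, int]

def lesion_volume_ml(mask: Set[Voxel], spacing_mm: Tuple[float, float, float]) -> float:
    """Volume = number of labeled voxels x volume of one voxel (mm^3), converted to mL."""
    voxel_mm3 = spacing_mm[0] * spacing_mm[1] * spacing_mm[2]
    return len(mask) * voxel_mm3 / 1000.0  # 1 mL = 1000 mm^3

def percent_change(before: float, after: float) -> float:
    return 100.0 * (after - before) / before

def cube(side: int) -> Set[Voxel]:
    """Hypothetical helper: a cubic 'lesion' of side x side x side voxels."""
    return {(i, j, k) for i in range(side) for j in range(side) for k in range(side)}

spacing = (1.0, 1.0, 2.0)  # assumed voxel size in mm (in-plane x, in-plane y, slice thickness)

# Two hypothetical lesions segmented at baseline and at follow-up, tracked in parallel.
scans: Dict[str, Dict[str, Set[Voxel]]] = {
    "baseline": {"lesion_A": cube(20), "lesion_B": cube(10)},   # 8000 and 1000 voxels
    "follow_up": {"lesion_A": cube(16), "lesion_B": cube(10)},  # 4096 and 1000 voxels
}
for name in ("lesion_A", "lesion_B"):
    v0 = lesion_volume_ml(scans["baseline"][name], spacing)
    v1 = lesion_volume_ml(scans["follow_up"][name], spacing)
    print(f"{name}: {v0:.3f} mL -> {v1:.3f} mL ({percent_change(v0, v1):+.1f}%)")
```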

Visualized medicine and its applications

The definition of visualized medicine

Visualized medicine, also known as medical visualization, refers to the application of visualization techniques in medicine. It employs elements from computer graphics to create meaningful, interactive visual representations of medical data, and it has become an influential field of research in many advanced applications. The visual representation uses the user’s cognitive ability to support and accelerate the diagnosis, planning, and quality assurance workflow based on the involved patient data.

The connotation of visualized medicine

The connotation of visualized medicine is the use of computer graphics, image processing, and related technologies to reconstruct human tissues, organs, and lesions in three dimensions (3D) from individual clinical information, so as to improve the accuracy and feasibility of medical diagnosis and treatment.

Medical image segmentation and extraction

Medical image segmentation is a bottleneck in clinical applications. Accurate segmentation is essential for doctors to obtain true disease information and make an effective diagnostic plan. Image segmentation methods can generally be divided into threshold-based, region-based, and specific theory-based methods.

The basic idea of the threshold method is to calculate one or more gray-level thresholds from the gray-level features of the image, compare the gray value of each pixel with these thresholds, and assign each pixel to the corresponding class according to the result. The method is simple, efficient, and fast. The most commonly used variant is Otsu's method. Qin et al. later proposed an Otsu multi-threshold medical image segmentation algorithm based on an improved ant colony optimization algorithm [27]. The basic idea of region-based segmentation is to extract information directly from the image and divide it into several sub-regions, each with certain shared characteristics. Traditional region-based methods include region growing and the watershed algorithm [28, 29]. Beyond these, there are also deep convolutional networks for biomedical image segmentation and segmentation based on genetic algorithms [30, 31].
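The two classical approaches named above can be sketched in plain Python: Otsu's method picks the gray level that maximizes the between-class variance of the histogram, and region growing floods outward from a seed pixel. This is a minimal illustration on a tiny synthetic 8-bit image; it is not Qin et al.'s ant-colony variant [27], and the tolerance value, 4-connectivity, and function names are assumptions.

```python
from collections import deque
from typing import List, Set, Tuple

Image = List[List[int]]  # 8-bit grayscale, values 0..255

def otsu_threshold(img: Image) -> int:
    """Return the gray level t that maximizes between-class variance (background: <= t)."""
    hist = [0] * 256
    for row in img:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))
    w0, sum0 = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(256):
        w0 += hist[t]                 # pixels with gray level <= t
        sum0 += t * hist[t]
        w1 = total - w0               # pixels with gray level > t
        if w0 == 0:
            continue
        if w1 == 0:
            break
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        between_var = w0 * w1 * (mu0 - mu1) ** 2  # proportional to between-class variance
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t

def region_grow(img: Image, seed: Tuple[int, int], tol: int) -> Set[Tuple[int, int]]:
    """4-connected region growing: absorb neighbors whose gray value is within tol of the seed's."""
    rows, cols = len(img), len(img[0])
    seed_val = img[seed[0]][seed[1]]
    region, queue = {seed}, deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in region
                    and abs(img[nr][nc] - seed_val) <= tol):
                region.add((nr, nc))
                queue.append((nr, nc))
    return region

# Tiny synthetic image: dark background (about 10-13) and a bright structure (about 198-210).
img = [
    [10, 12, 11, 200],
    [11, 13, 210, 205],
    [12, 198, 202, 199],
]
t = otsu_threshold(img)
mask = [[1 if v > t else 0 for v in row] for row in img]
background = region_grow(img, seed=(0, 0), tol=5)
```

Thresholding classifies every pixel globally by gray level, while region growing also requires spatial connectivity to the seed, which is why the two are often combined in practice.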

The extension of visualized medicine

The extension of visualized medicine is an interdisciplinary fusion of several frontier fields. At the current technological frontier, it includes, but is not limited to: CT, MRI, ultrasonography, positron emission tomography (PET), and other medical imaging technologies; image registration and fusion, image segmentation, texture analysis, pseudo-color processing, 3D image reconstruction, and other image processing technologies; and virtual reality, big data analysis tools, and AI equipment and technology. In addition, as visualization is increasingly applied in medicine, expectations are rising, and higher requirements are being placed on knowledge discovery from data, automatic benchmarking of image segmentation, and the efficiency of 3D reconstruction.

Visualization and application in clinical medicine

Ultrasonic data image visualization

3D ultrasound (US) technology has been widely used in gynecology, cardiovascular and abdominal examinations, ophthalmology, and other areas [32]. At present, 3D US workstation software is the main means of obtaining 3D images with conventional two-dimensional (2D) ultrasound diagnostic equipment. Dynamic 3D contrast-enhanced ultrasound (CE-US) can form continuous 3D images during contrast enhancement, showing the 3D morphology of blood vessels and allowing in-depth observation of their spatial structure and perfusion. Because 3D reconstruction of ultrasound images can recover the spatial relationship between a tumor and its surroundings, this technology can be widely applied in surgery and in the clinical treatment of benign and malignant tumors.

CT data visualization

Data collected by 64-row spiral CT can be displayed as conventional 2D images, or post-processed to reconstruct 3D images, organ surfaces, multiple planes, and so on, displaying the structure of each layer in real time or near real time; this is currently the main inspection method in clinical practice. Because the method is efficient, accurate, very fast, low in radiation dose, and noninvasive, it can be applied to lung tumors, emergency general examinations, the diagnosis of cardiovascular disease, and oral and craniocerebral examinations, among others.
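The 3D post-processing described above starts from the stack of 2D slices. The sketch below, in plain Python with nested lists, stacks axial slices into a volume, extracts coronal and sagittal planes (multiplanar reformation), and computes a maximum intensity projection (MIP), a common 3D display technique named here as an illustration rather than taken from the source. It assumes equally sized slices with isotropic spacing and the usual display layout (image rows run anterior to posterior); real CT software also interpolates between slices. The function names are hypothetical.

```python
from typing import List

Slice = List[List[int]]   # one axial image, indexed [row][col]
Volume = List[Slice]      # stack of axial slices, indexed [z][row][col]

def stack_slices(slices: List[Slice]) -> Volume:
    """Stack consecutive axial slices into a 3D volume (copies the data)."""
    rows, cols = len(slices[0]), len(slices[0][0])
    if any(len(s) != rows or any(len(r) != cols for r in s) for s in slices):
        raise ValueError("all slices must have the same size")
    return [[row[:] for row in s] for s in slices]

def coronal(vol: Volume, row: int) -> Slice:
    """Multiplanar reformation: a coronal plane is one fixed image row taken from every slice."""
    return [vol[z][row][:] for z in range(len(vol))]

def sagittal(vol: Volume, col: int) -> Slice:
    """A sagittal plane is one fixed image column taken from every slice."""
    return [[vol[z][r][col] for r in range(len(vol[z]))] for z in range(len(vol))]

def mip_axial(vol: Volume) -> Slice:
    """Maximum intensity projection along z: keep the brightest voxel on each ray through the stack."""
    rows, cols = len(vol[0]), len(vol[0][0])
    return [[max(vol[z][r][c] for z in range(len(vol))) for c in range(cols)] for r in range(rows)]

# Three tiny 2 x 2 axial slices with made-up intensities.
vol = stack_slices([
    [[0, 1], [2, 3]],
    [[4, 9], [5, 6]],
    [[7, 0], [8, 1]],
])
print(coronal(vol, 0))   # [[0, 1], [4, 9], [7, 0]]
print(sagittal(vol, 1))  # [[1, 3], [9, 6], [0, 1]]
print(mip_axial(vol))    # [[7, 9], [8, 6]]
```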

Visualization of MRI data

MRI offers strong soft tissue discrimination, much better than that of CT. In addition, 3D MRI reconstruction can be performed in multiple directions, involves no ionizing radiation, is free of bone artifacts, and supports multi-directional imaging. Owing to these inherent advantages, 3D MRI has been widely used in muscle, joint, and central nervous system examinations. In recent years, with the rapid development of MR angiography, MRI technology has matured further and now includes real-time endoscopy, perfusion imaging, diffusion imaging, functional MRI, and MR angiography. These techniques have been applied to cardiovascular, pulmonary tumor, pancreatic, gastrointestinal, brain, and other diseases.

Deep learning in diagnosis and treatment

The advantages of deep learning applied in diagnosis and treatment

Nowadays, AI has made great breakthroughs in medicine, especially in medical imaging, which is widely used in the diagnosis and treatment of diseases of the brain, heart, lungs, and other organs [33].

With the advancement of ML, especially deep learning, AI-aided disease prediction has attracted increasing attention. Traditional ML-based medical image research analyzes image features specified by doctors, so the resulting models generalize poorly, and it is difficult for them to classify the stage of disease development. Compared with the traditional reading of medical images, deep learning can complete the initial screening of images quickly, more comprehensively, and more stably. Deep learning has benefited from three developments: 1) high-performance central processing units (CPUs) and graphics processing units (GPUs); 2) the availability of massive amounts of data; and 3) advances in learning algorithms. Through deep learning, medical images can be classified and segmented and their features extracted, assisting doctors in diagnosis and treatment. Comprehensiveness is reflected in the fact that the machine can examine the whole slice without omissions. Stability is reflected in the fact that the machine needs no rest and is not affected by fatigue, so its diagnostic results are more consistent.

Furthermore, for some diseases, the accuracy of AI diagnosis and analysis has reached the level of professional doctors. Shen et al. systematically reviewed studies comparing the diagnostic performance of AI and clinicians [34]. Across seven studies comparing specificity, sensitivity, and area under the curve (AUC) between AI and clinicians, they found that the diagnostic performance of AI was comparable to that of experts and significantly better than that of less experienced clinicians. Although AI has advanced medical technology, it still lags behind the human brain in some respects. Therefore, AI cannot fundamentally replace doctors in medical practice and should be applied as an aid to them.
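For readers less familiar with the metrics compared by Shen et al., the sketch below computes sensitivity, specificity, and AUC in plain Python. The labels and scores are made up, the 0.5 decision threshold is an assumption, and AUC is computed through its rank interpretation: the probability that a randomly chosen positive case scores higher than a randomly chosen negative one.

```python
from typing import List, Tuple

def sensitivity_specificity(y_true: List[int], y_pred: List[int]) -> Tuple[float, float]:
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)

def auc(y_true: List[int], scores: List[float]) -> float:
    """AUC as P(score of a random positive > score of a random negative); ties count as 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Made-up ground truth (1 = disease) and model scores for eight cases.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.6, 0.3, 0.7, 0.2, 0.1, 0.4]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed decision threshold of 0.5

sens, spec = sensitivity_specificity(y_true, y_pred)
print(sens, spec, auc(y_true, scores))  # 0.75 0.75 0.8125
```

Sensitivity and specificity depend on the chosen threshold, whereas AUC summarizes performance over all thresholds, which is why studies comparing AI with clinicians often report all three.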

The application of deep learning in diagnosis and treatment

The applications of deep learning in diagnosis and treatment have been developing in various fields, such as for the brain, heart, lungs, and other organs [13].

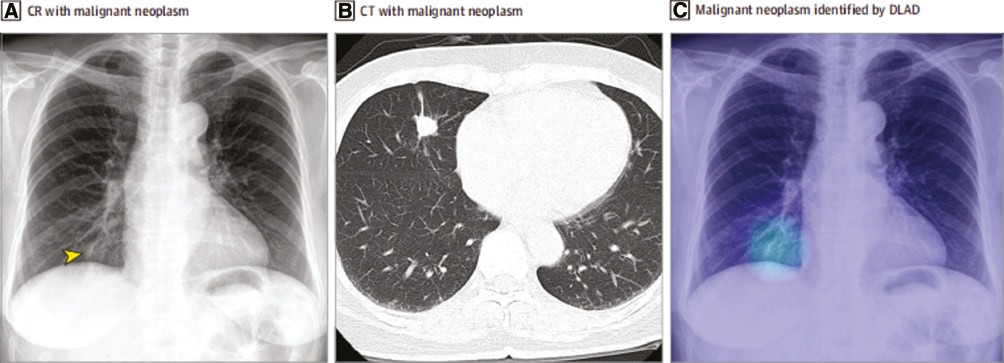

Hwang et al. developed a deep learning-based automatic detection algorithm (DLAD) for major thoracic diseases on chest radiographs (CRs) and compared its results with those of physicians to verify its accuracy [35]. Figure 2 shows pneumonia imaging by CR, CT, and DLAD. The work had three parts: data collection and curation, development of the DLAD algorithm, and evaluation of its performance. DLAD is a CNN containing five parallel classifiers and 26 dense blocks; four classifiers were disease-specific, and the fifth produced the overall abnormality result. The algorithm was trained with both a localization loss and a classification loss, which enhanced its ability to classify and localize abnormalities. Five independent data sets were then collected and curated to evaluate the consistency of DLAD. Five radiologists selected and marked the abnormal locations on the CRs, and finally the results of DLAD were compared with those of the radiologists.
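Training with both a classification loss and a localization loss, as described for DLAD, is a form of multi-task learning. The exact formulation used by Hwang et al. is not given here, so the sketch below assumes a common choice: binary cross-entropy on the image-level abnormality probability plus the mean per-cell binary cross-entropy on a coarse lesion map, combined with a hypothetical weighting factor `lam`. All names and numbers are illustrative.

```python
import math
from typing import List

EPS = 1e-7  # clip probabilities so that log() is never evaluated at 0

def bce(p: float, y: int) -> float:
    """Binary cross-entropy for one predicted probability p and a 0/1 label y."""
    p = min(max(p, EPS), 1.0 - EPS)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))

def combined_loss(image_prob: float, image_label: int,
                  loc_probs: List[List[float]], loc_mask: List[List[int]],
                  lam: float = 1.0) -> float:
    """Classification loss (is the radiograph abnormal?) plus lam * localization loss (where?)."""
    cls_loss = bce(image_prob, image_label)
    cells = [(p, y) for p_row, y_row in zip(loc_probs, loc_mask) for p, y in zip(p_row, y_row)]
    loc_loss = sum(bce(p, y) for p, y in cells) / len(cells)  # mean over grid cells
    return cls_loss + lam * loc_loss

# Hypothetical output for one abnormal radiograph with a lesion in the top-left grid cell.
loss = combined_loss(
    image_prob=0.9, image_label=1,
    loc_probs=[[0.8, 0.1], [0.2, 0.1]],
    loc_mask=[[1, 0], [0, 0]],
)
```

Because both terms are minimized together, the network is penalized both for missing an abnormality and for placing it in the wrong region.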

Figure 2 Pneumonia imaging by CR, CT, and DLAD. A. CR shows a nodular opacity (arrow). B. CT shows the nodular opacity in the right lobe. C. DLAD localized the lesion accurately. (Reproduced from E. J. Hwang et al., Development and validation of a deep learning–based automated detection algorithm for major thoracic diseases on chest radiographs, JAMA Netw Open 2 (2019) e191095, with the permission of American Medical Association Ltd.).

DLAD classified normal and abnormal areas and processed the independent data sets efficiently; its performance even exceeded that of the radiologists. Such algorithms can improve the quality and efficiency of diagnosing major thoracic diseases.

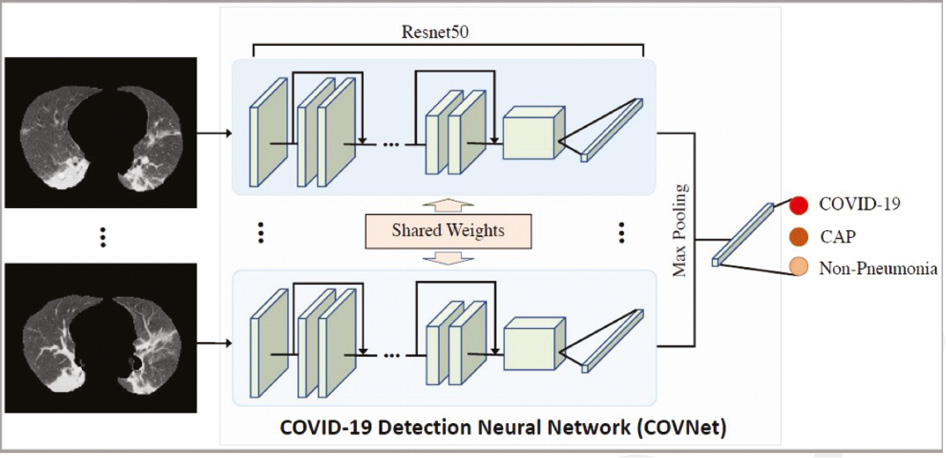

Li et al. designed a 3D deep learning model, COVNet, to detect COVID-19 on chest CT [36]. With COVID-19 spreading all over the world, it has become a global problem. The model extracts visual features from chest CTs to detect COVID-19; Figure 3 shows its 3D deep learning framework. Chest CTs of community-acquired pneumonia (CAP) and other non-pneumonia cases were also included in testing, which verified the model's robustness. Using ResNet50 as the backbone, the model takes a series of CT slices as input and produces a feature for each slice. The features of all slices are combined by a max-pooling operation, and the final feature is fed into a fully connected layer to produce a probability score.
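The aggregation step described above (per-slice features, max pooling across slices, then a fully connected layer) can be sketched in plain Python. This is a minimal illustration of the head only, under stated assumptions: the ResNet50 backbone is omitted and its per-slice features are replaced by short made-up vectors, the output is assumed to be a softmax over three classes (COVID-19, CAP, non-pneumonia), and the weights and function names are hypothetical.

```python
import math
from typing import List

CLASSES = ["COVID-19", "CAP", "non-pneumonia"]

def max_pool_slices(slice_feats: List[List[float]]) -> List[float]:
    """Element-wise maximum across slices: one feature vector for the whole CT series."""
    return [max(values) for values in zip(*slice_feats)]

def fully_connected(x: List[float], W: List[List[float]], b: List[float]) -> List[float]:
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def softmax(z: List[float]) -> List[float]:
    m = max(z)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def classify_series(slice_feats: List[List[float]], W: List[List[float]], b: List[float]) -> List[float]:
    """Max-pool per-slice features, then a fully connected layer and softmax give class probabilities."""
    return softmax(fully_connected(max_pool_slices(slice_feats), W, b))

# Hypothetical 3-dimensional features for three slices (a real backbone outputs far longer vectors).
slice_feats = [[0.1, 0.7, 0.0], [0.9, 0.2, 0.3], [0.4, 0.5, 0.8]]
W = [[2.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 2.0]]  # hypothetical weights
b = [0.0, 0.0, 0.0]
probs = classify_series(slice_feats, W, b)
print(CLASSES[probs.index(max(probs))])  # COVID-19
```

Max pooling makes the series-level feature independent of the number and order of slices, so a strong finding on any single slice can drive the prediction.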

Figure 3 The 3D deep learning framework of the COVNet. (Reproduced from L. Li et al., Artificial intelligence distinguishes COVID-19 from community acquired pneumonia on chest CT. Radiology. 19 (2020) 200905, with the permission of Radiological Society of North America Inc Ltd.).

In the fight against COVID-19, deep learning models offer a promising method for distinguishing COVID-19 from CAP on chest CT.

Development trend of AI-aided visualized medicine

Recently, AI has been developing rapidly in many areas, including hospital management, drug mining, medical imaging, clinical decision support, health management, and pathology. AI-based medical image research now focuses on analyzing many types of images, such as CT, MRI, X-ray, US, endoscopy, and pathological sections, and covers lung, breast, skin, brain, and ophthalmic diseases, among others. In medical image recognition, AI can improve doctors' working efficiency. Nevertheless, medical image analysis faces many challenges. Doctors need to combine the useful information in an image with the patient's age, region, medical history, and so on to make a more accurate judgment. One bottleneck is that, in deep learning NNs, the clinical data may be overwhelmed by the massive image data [37]. It is therefore important to balance image data and clinical data. In addition, doctors make judgments based on anatomical information, whereas deep learning mostly performs patch classification, so the anatomical location is usually unknown to the computer, which can prevent a correct judgment.

Although these problems show that the application of AI in visualized medicine is still in its infancy, new progress and breakthroughs in AI-aided disease prediction and visualized medicine can be expected to continue, and AI in visualized medicine will likely show its tremendous potential and drive rapid clinical applications in the coming decades.

Conflict of interest

The authors declare no conflicts of interest.

References

- Wooldridge M, Jennings NR. Intelligent agents: theory and practice. Knowl Eng Rev 1995;10:115-52. [DOI: 10.1017/S0269888900008122]

- Jiang F, Jiang Y, Zhi H, Dong Y, Li H, et al. Artificial intelligence in healthcare: past, present and future. Stroke Vasc Neurol 2017;2:230-43. [PMID: 29507784 DOI: 10.1136/svn-2017-000101]

- Jin W. Research progress and application of computer artificial intelligence technology. MATEC Web Conf 2018;176:01043. [DOI: 10.1051/matecconf/201817601043]

- Huang G, Huang GB, Song S, You K. Trends in extreme learning machines: a review. Neural Netw 2015;61:32-48. [PMID: 25462632 DOI: 10.1016/j.neunet.2014.10.001]

- Domingos P, Pazzani M. On the optimality of the simple Bayesian classifier under zero-one loss. Mach Learn 1997;29:103-30. [DOI: 10.1023/A:1007413511361]

- Cortes C, Vapnik V. Support-vector networks. Mach Learn 1995;20:273-97. [DOI: 10.1007/BF00994018]

- Cao DS, Xu QS, Liang YZ, Chen XA, Li HD. Automatic feature subset selection for decision tree-based ensemble methods in the prediction of bioactivity. Chemom Intell Lab Syst 2010;103:129-36. [DOI: 10.1016/j.chemolab.2010.06.008]

- Watts DJ, Strogatz SH. Collective dynamics of ‘small-world’ networks. Nature 1998;393:440-2. [PMID: 9623998 DOI: 10.1038/30918]

- Russell S, Norvig P. Artificial intelligence: a modern approach. Saddle River, NJ: Prentice Hall; 1994.

- LeCun Y, Bengio Y, Hinton G. Deep learning. Nature 2015;521:436-44. [PMID: 26017442 DOI: 10.1038/nature14539]

- Schmidhuber J. Deep learning in neural networks: an overview. Neural Netw 2015;61:85-117. [PMID: 25462637 DOI: 10.1016/j.neunet.2014.09.003]

- Arel I, Rose DC, Karnowski TP. Deep machine learning: a new frontier in artificial intelligence research. IEEE Comput Intell Mag 2010;5:13-8. [DOI: 10.1109/MCI.2010.938364]

- Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016;316:2402-10. [PMID: 27898976 DOI: 10.1001/jama.2016.17216]

- Kermany DS, Goldbaum M, Cai W, Valentim CCS, Liang H, et al. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell 2018;172:1122-31. [PMID: 29474911 DOI: 10.1016/j.cell.2018.02.010]

- Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017;542:115-8. [PMID: 28117445 DOI: 10.1038/nature21056]

- Cheng JZ, Ni D, Chou Y-H, Tiu C-M, Chang Y-C, et al. Computer-aided diagnosis with deep learning architecture: applications to breast lesions in US images and pulmonary nodules in CT scans. Sci Rep 2016;6:24454. [PMID: 27079888 DOI: 10.1038/srep24454]

- Goodfellow I, Bengio Y, Courville A. Deep learning. Cambridge, MA: Massachusetts Institute of Technology Press; 2016.

- Murdoch TB, Detsky AS. The inevitable application of big data to health care. JAMA 2013;309:1351-2. [PMID: 23549579 DOI: 10.1001/jama.2013.393]

- Sitapati A, Kim H, Berkovich B, Marmor R, Singh S, et al. Integrated precision medicine: the role of electronic health records in delivering personalized treatment. Wiley Interdiscip Rev Syst Biol Med 2017;9:1-12. [PMID: 28207198 DOI: 10.1002/wsbm.1378]

- Niazi MK, Parwani AV, Gurcan MN. Digital pathology and artificial intelligence. Lancet Oncol 2019;20:e253-61. [PMID: 31044723 DOI: 10.1016/S1470-2045(19)30154-8]

- Ting DS, Pasquale LR, Peng L, Campbell JP, Lee AY, et al. Artificial intelligence and deep learning in ophthalmology. Br J Ophthalmol 2019;103:167-75. [PMID: 30361278 DOI: 10.1136/bjophthalmol-2018-313173]

- Liang H, Tsui BY, Ni H, Valentim CC, Baxter SL, et al. Evaluation and accurate diagnoses of pediatric diseases using artificial intelligence. Nat Med 2019;25:433-8. [PMID: 30742121 DOI: 10.1038/s41591-018-0335-9]

- Ting DSW, Cheung CY, Lim G, Tan GSW, Quang ND, et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA 2017;318:2211-23. [PMID: 29234807 DOI: 10.1001/jama.2017.18152]

- Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. International Conference on Medical Image Computing and Computer-Assisted Intervention; 2015. pp. 234-41. [DOI: 10.1007/978-3-319-24574-4_28]

- Veta M, Kornegoor R, Huisman A, Verschuur-Maes AHJ, Viergever MA, et al. Prognostic value of automatically extracted nuclear morphometric features in whole slide images of male breast cancer. Mod Pathol 2012;25:1559-65. [PMID: 22899294 DOI: 10.1038/modpathol.2012.126]

- Lee G, Sparks R, Ali S, Shih NNC, Feldman MD, et al. Co-occurring gland angularity in localized subgraphs: predicting biochemical recurrence in intermediate-risk prostate cancer patients. PLoS One 2014;9:e97954.

- Qin J, Shen X, Mei F, Fang Z. An Otsu multi-thresholds segmentation algorithm based on improved ACO. J Supercomput 2019;75:955-67.

- Horbelt S, Vetterli M, Unser M. High-quality wavelet splatting for volume rendering. Wavelets and Applications Workshop; 1998. pp. 24-39.

- Bergner S, Möller T, Drew MS, Finlayson GD. Interactive spectral volume rendering. Proceedings of the Conference on Visualization '02; 2002. pp. 101-8. [DOI: 10.1109/VISUAL.2002.1183763]

- Faust NL. Radar sensor technology and data visualization. Bellingham, WA: SPIE; 2002. Proc SPIE 4744:3-7.

- Zhou M, Zhou Y, Liao HJ, Rowland BC, Kong XQ, et al. Diagnostic accuracy of 2-hydroxyglutarate magnetic resonance spectroscopy in newly diagnosed brain mass and suspected recurrent gliomas. Neuro-oncology 2018;20:1262-71. [PMID: 29438510 DOI: 10.1093/neuonc/noy022]

- Currie G. Intelligent imaging: artificial intelligence augmented nuclear medicine. J Nucl Med Technol 2019;47:217-22. [PMID: 31401616 DOI: 10.2967/jnmt.119.232462]

- Choi C, Raisanen JM. Prospective longitudinal analysis of 2-hydroxyglutarate magnetic resonance spectroscopy identifies broad clinical utility for the management of patients with IDH-mutant glioma. J Clin Oncol 2016;34:4030-9. [PMID: 28248126 DOI: 10.1200/JCO.2016.67.1222]

- Shen JY, Zhang JP, Jiang BS. Artificial intelligence versus clinicians in disease diagnosis: systematic review. JMIR Med Inform 2019;7:e10010. [PMID: 31420959 DOI: 10.2196/10010]

- Hwang EJ, Park S, Jin K-N, Kim JI, Choi SY, et al. Development and validation of a deep learning–based automated detection algorithm for major thoracic diseases on chest radiographs. JAMA Netw Open 2019;2:e191095. [PMID: 30901052 DOI: 10.1001/jamanetworkopen.2019.1095]

- Li L, Qin L, Xu Z, Yin Y, Wang X, et al. Artificial intelligence distinguishes COVID-19 from community acquired pneumonia on chest CT. Radiology 2020;19:200905. [PMID: 32191588 DOI: 10.1148/radiol.2020200905]

- Currie G, Hawk KE, Rohren E, Klein AVR. Machine learning and deep learning in medical imaging: intelligent Imaging. J Med Imaging Radiat Sci 2019;50:477-87. [PMID: 31601480 DOI: 10.1016/j.jmir.2019.09.005]